Exploring the Fundamentals of Kubernetes Architecture

This document aims to provide a foundational guide to Kubernetes architecture, covering a range of essential topics including basic concepts, commands, and detailed insights into application deployment processes. Designed to cater to both beginners and intermediate users, this guide serves as a comprehensive resource for anyone looking to explore the world of Kubernetes.

Whether you are taking your first steps into container orchestration or seeking to deepen your existing knowledge, this document will guide you through the intricacies of Kubernetes. It offers a clear and structured approach to understanding the platform’s key features and functionalities.

From understanding the core components that make up the Kubernetes ecosystem to mastering the basic commands necessary for effective cluster management, this guide aims to equip you with the knowledge needed to navigate the Kubernetes landscape confidently.

Furthermore, the document delves into advanced topics such as security with Role-Based Access Control (RBAC), resource management through auto-scaling, troubleshooting techniques including debugging pods, and an overview of Helm, the package manager for Kubernetes. These sections provide practical insights and real-world examples, enabling you to apply your learning in practical scenarios.

By the end of this guide, you will have a solid foundation in Kubernetes architecture, along with the skills to implement and manage Kubernetes-based applications effectively. This journey through Kubernetes architecture is not just about learning the concepts but also about understanding how to apply them in real-world situations.

So, let’s embark on this educational journey to master Kubernetes, one of the most powerful and widely used container orchestration platforms in the tech world today.

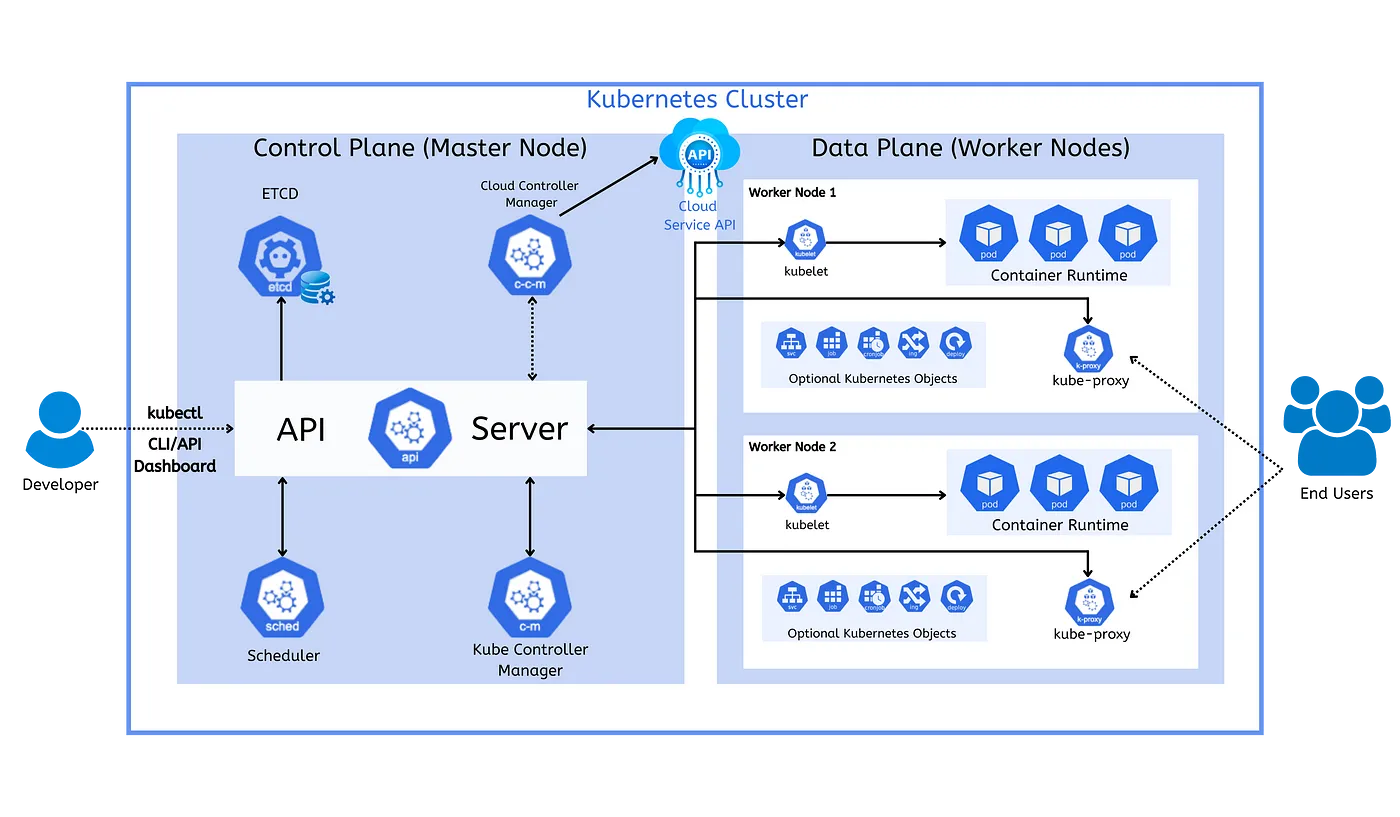

Components and Relationships within a Kubernetes Cluster:

Table of Contents

- Kubernetes Basic Concepts

- Kubernetes Basic Commands

- Kubernetes Application Deployment Process

- Security in Kubernetes: Role-Based Access Control (RBAC)

- Resource Management: Auto-Scaling

- Troubleshooting: Debugging Pods

- Package Manager for Kubernetes: Helm

Kubernetes Basic Concepts

Kubernetes is a powerful container orchestration tool that automates the deployment, scaling, and management of containerized applications. Understanding the following basic concepts is crucial for using Kubernetes.

-

Cluster: A collection of nodes (Node) that provides a set of computing resources, used to deploy and manage containerized applications.

-

Node: A node is the fundamental element of a cluster and can be a virtual or physical machine, responsible for running container applications.

-

Pod: The smallest deployable unit, typically contains one or more closely related containers.

-

Service: An abstract way to expose an application running on a set of Pods as a network service. Defines a way to access Pods, usually provides a stable IP address and port.

-

Deployment: Manages the creation and update of Pods, suitable for stateless applications.

-

ReplicaSet: Ensures that a specified number of Pod replicas are always running.

-

StatefulSet: Especially suitable for stateful applications that need persistent storage and unique network identifiers, like databases.

-

Namespace: Used to create multiple virtual clusters within the same physical cluster, divide resources into different virtual clusters.

-

Persistent Volume (PV): Provides persistent storage for Pods.

-

Persistent Volume Claim (PVC): A request for storage resources by a user.

-

ConfigMap: Manages configuration options and stores non-sensitive data.

-

Secret: Stores sensitive information, such as passwords and keys.

-

Ingress: Manages access rules from the external network to services within the cluster.

Kubernetes Basic Commands

Familiarity with the following basic commands is key to effectively managing a Kubernetes cluster.

kubectl get nodes # List all nodes

kubectl create -f <filename> # Create resources using a configuration file

kubectl get pods # List all Pods

kubectl describe pod <pod-name> # View detailed information about a specific Pod

kubectl logs <pod-name> # Get logs from a Pod

kubectl exec -it <pod-name> -- /bin/bash # Enter the command line of a Pod

kubectl delete pod <pod-name> # Delete a specific Pod

kubectl apply -f <filename> # Apply changes from a configuration file

kubectl get svc # List all services

kubectl get deployments # List all deployments

kubectl scale deployment <deployment-name> --replicas=<num> # Adjust the number of replicas for a deployment

kubectl get namespace # List all namespaces

kubectl create namespace <name> # Create a new namespace

Kubernetes Application Deployment Process

The following is a typical process for deploying an application using Kubernetes, including basic steps and additional details.

-

Establishing a Cluster: First, build a Kubernetes cluster consisting of multiple nodes. This can be done on cloud platforms like AWS, GCP, Azure, or on-premises.

-

Deploying Pods: Create Pods for applications like frontend, backend, and database within the cluster. Pods are the smallest deployable units and can host one or more containers.

- Frontend Pod: Hosts the user interface.

- Backend Pod: Runs the application logic.

- Database Pod: Stores application data persistently.

-

Configuring Services: Create Services for the frontend and backend applications to enable internal and external communication. Services provide a stable endpoint for accessing Pods.

-

Implementing Ingress: Set up Ingress for managing external access to the services within the cluster, typically the frontend service. Ingress allows you to define rules for external connectivity.

-

Using ConfigMaps and Secrets: Deploy ConfigMaps for managing environment configurations and Secrets for sensitive data. This ensures that configurations and sensitive data are managed and deployed separately from the container image.

-

Persistent Storage with PV and PVC: Implement Persistent Volumes (PV) and Persistent Volume Claims (PVC) for data storage. This is crucial for database Pods to ensure data is not lost when the Pod is restarted or moved.

-

Monitoring and Logging: Set up monitoring and logging tools to keep track of the application’s performance and troubleshoot issues. Tools like Prometheus for monitoring and ELK Stack for logging can be integrated.

-

Scaling and Load Balancing: Use ReplicaSets or Deployments to manage the number of Pod replicas, ensuring high availability and load balancing.

-

Updating and Rolling Back: Perform rolling updates and rollbacks using Deployments. This allows you to update the application without downtime.

-

Namespace Usage: Use Namespaces to organize resources in the cluster and provide a scope for names. This helps in multi-tenant environments and resource management.

-

Clean-up Resources: Finally, it’s important to know how to clean up and remove resources from Kubernetes to manage resources efficiently.

This process encapsulates a general approach to deploying applications on Kubernetes and can vary based on specific requirements and configurations.

Security in Kubernetes: Role-Based Access Control (RBAC)

RBAC is a method of regulating access to computer or network resources based on individual roles within an organization. In Kubernetes, RBAC allows you to control who can access the Kubernetes API and what permissions they have.

Scenario and Example:

Imagine you have a team where developers should only be able to read Pods in a namespace, while admins can create or delete any resources in the namespace.

-

Define Roles: Create roles that define the permissions for a particular set of resources.

kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: namespace: default name: pod-reader rules: - apiGroups: [""] resources: ["pods"] verbs: ["get", "watch", "list"] -

Bind Roles to Users/Groups: Use RoleBindings to grant the defined roles to users or groups.

kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: read-pods namespace: default subjects: - kind: User name: jane apiGroup: rbac.authorization.k8s.io roleRef: kind: Role name: pod-reader apiGroup: rbac.authorization.k8s.io

Resource Management: Auto-Scaling

Auto-scaling in Kubernetes allows applications to adjust to the workload dynamically. There are two types of auto-scaling: Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA).

Scenario and Example:

If your web application experiences varying traffic, you might want to automatically scale the number of pods up or down based on CPU utilization or other select metrics.

- Horizontal Pod Autoscaler: Automatically scales the number of Pods in a replication controller, deployment, or replica set based on observed CPU utilization.

kubectl autoscale deployment my-web-app --cpu-percent=50 --min=1 --max=10This command creates an HPA that maintains between 1 and 10 replicas of the Pods controlled by the

my-web-appdeployment, scaling up when the CPU exceeds 50% of the allocated resource.

Troubleshooting: Debugging Pods

Understanding how to troubleshoot and debug Pods in Kubernetes is essential for maintaining healthy workloads.

Scenario and Example:

A Pod is in a CrashLoopBackOff state and you need to find out why.

- Inspect Pod Logs: First, check the logs of the problematic Pod.

kubectl logs <pod-name> - Exec into the Pod: If logs don’t reveal enough, you can exec into the pod for a more detailed investigation.

kubectl exec -it <pod-name> -- /bin/bash - Describe Pod: Use describe to get more details, especially if the Pod is failing to start.

kubectl describe pod <pod-name>

Package manager for Kubernetes: Helm

Helm is a package manager for Kubernetes, akin to apt or yum in Linux. It allows users to define, install, and upgrade Kubernetes applications more easily.

Core Concepts of Helm

- Chart: A Helm package containing all resource definitions needed to run an application on Kubernetes.

- Repository: A place where charts are stored and shared.

- Release: An instance of a chart running in a Kubernetes cluster.

Chart Introduction

A Chart is a collection of files that describe a related set of Kubernetes resources. A single chart might be used to deploy something simple, like a memcached pod, or something complex, like a full web app stack with HTTP servers, databases, caches, and so on.

Chart Structure

A typical chart structure looks like this:

mychart/

├── Chart.yaml # A YAML file containing information about the chart

├── values.yaml # The default configuration values for this chart

├── charts/ # A directory containing any charts upon which this chart depends.

├── templates/ # A directory of templates that, when combined with values, will generate valid Kubernetes manifest files.

└── crds/ ## Optional: Custom Resource Definitions

Installing Helm

- Download the Helm binary.

- Unpack and move it to an appropriate directory.

- Run

helm initto initialize Helm and Tiller (if using Helm 2).

Basic Usage of Helm

- Add Repository:

helm repo add [name] [url] - Search Chart:

helm search repo [keyword] - Install Chart:

helm install [name] [chart] - Upgrade Release:

helm upgrade [release] [chart] - Rollback Release:

helm rollback [release] [revision] - List Releases:

helm list - Delete Release:

helm delete [release]

Benefits of Using Helm

- Simplified Deployment Process: Simplifies the deployment of Kubernetes applications using predefined templates.

- Version Control and Sharing: Easily manage and share different versions of application configurations.

- Ease of Upgrades and Rollbacks: Provides simple commands for upgrading and rolling back applications.

Learning Resources

- Official Helm Documentation: Helm Docs

Helm is an essential tool in the Kubernetes ecosystem for managing complex Kubernetes applications.

If you found this post useful, feel free to bookmark, share, or follow my blog at astromen.github.io!